Trust Everything, Everywhere

Unpolished thoughts and reflections on an emerging opportunity space

How can embodied artificial intelligences securely coordinate joint activity under adversarial conditions, and what would it take for societies to trust them to do so?

What advantages for secure coordination do artificial intelligences have over humans? Specifically, how might they leverage programmable cryptography to enable new coordination protocols?

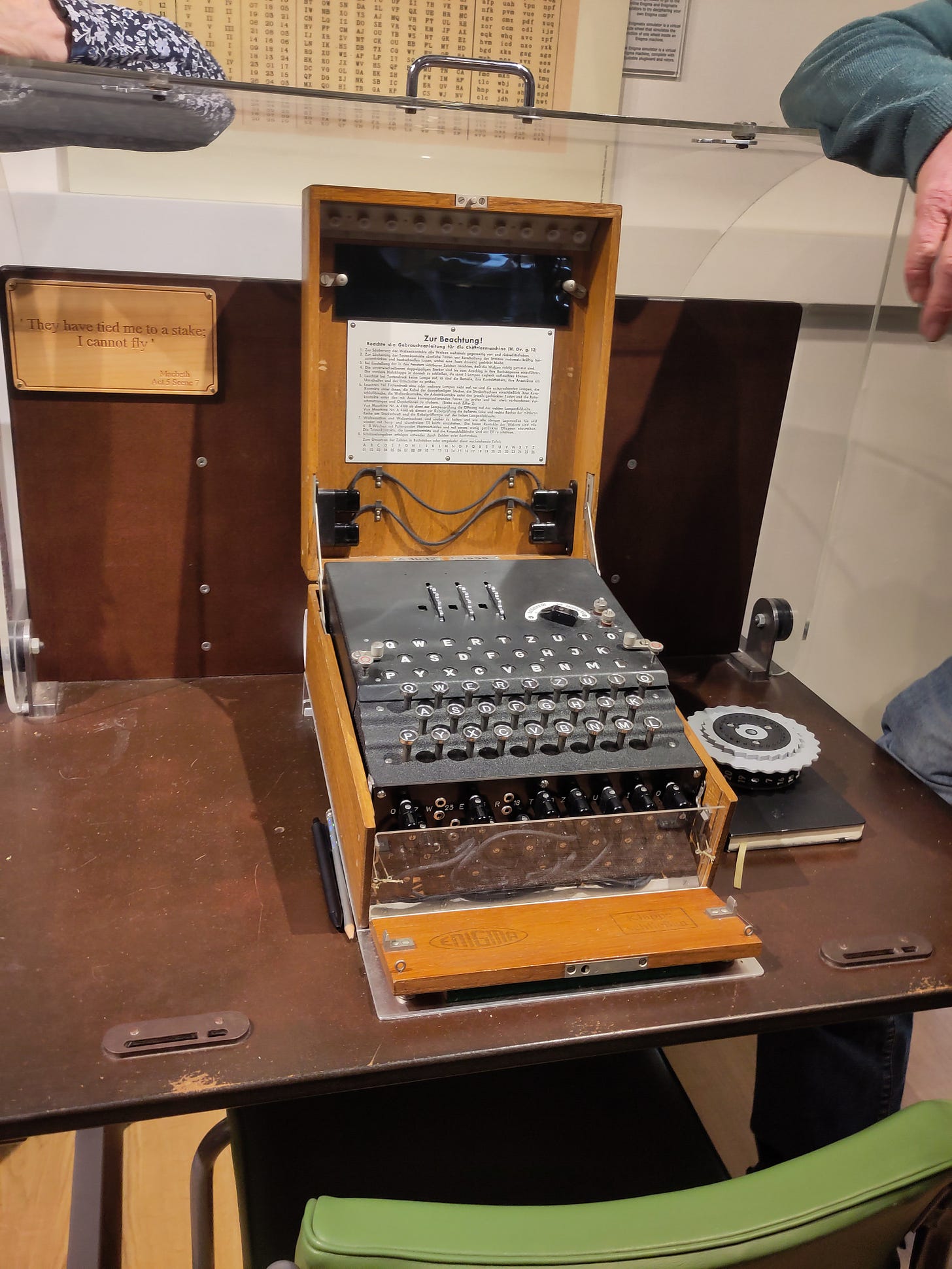

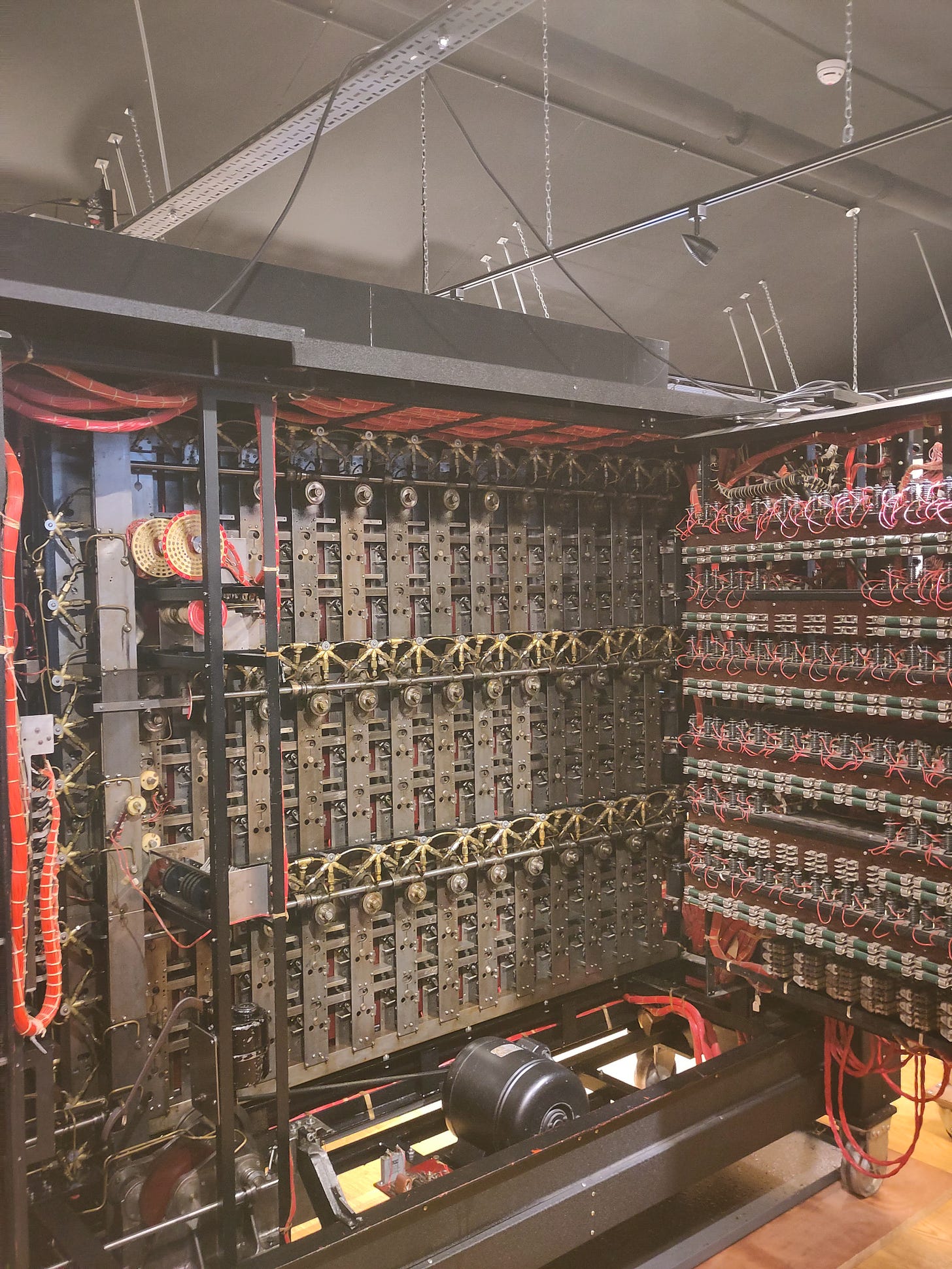

Last week I joined a group of researchers, builders and thinkers at Bletchley Park to explore questions like these as part of a program discovery workshop for the Trust Everything, Everywhere opportunity space. What a setting, by the way, steeped in computational and cryptographic history. A broad spectrum of disciplines was represented at the workshop: cryptography, secure hardware, robotics, AI/ML and quantum computing. It was both exciting and a little overwhelming, especially for my slow autumnal brain.

What follows are my thoughts and reflections on the workshop, the program and the opportunity space after a week of pondering.

What is Trust Everything, Everywhere?

Trust Everything, Everywhere is one of the opportunity spaces defined by the UK’s new Advanced Research and Invention Agency (ARIA); think DARPA without the defense angle. ARIA’s opportunity spaces and the funding programs within them are exploring the technological frontiers of science to prepare for the coming wave of artificial intelligence and synthetic biology, two general-purpose technologies with transformative potential and terrifying risks.

The Trust Everything, Everywhere opportunity space explores how trust can be secured, evaluated and placed in futures populated with embodied artificial intelligences.

On Trust

Trust is the social fabric that has enabled societies to scale to increasing degrees of complexity and coordinated activity. Humanity has developed and refined all manner of techniques and protocols for inducing trustworthy behavior, evaluating trustworthiness and mitigating the risk of placing trust in the untrustworthy. Redundant semi-voluntary facial expressions, locked doors, social norms, reputation networks, institutions, credentials and insurance to name a few. Through these mechanisms, humanity weaves rich webs of entanglement and accountability enabling us to place enough trust in unknown others we unavoidably encounter as we negotiate our environment and enact our lives.

Trust is placed and secured in the present. It is a judgment about whether some unpredictable other can be trusted to uphold or fulfill some expected behavior or activity in the future. This judgment is made, and trust is secured, primarily by evaluating meaningful information from the past. We aim to place trust in the trustworthy and avoid the untrustworthy. Trust is the mechanism that helps us select actions under uncertainty, and while reducing uncertainty is valuable, I claim that removing it entirely is neither possible nor desirable. Uncertainty isn’t a flaw; it’s a prerequisite for emergence and complexity.

So what does this mean for the world of silicon?

What is the set of tools, protocols and systems that societies need to develop when the entities placing trust and being trusted are embodied artificial intelligences?

I look forward to seeing how the Trust Everything, Everywhere opportunity space addresses the complex multi-faceted question of trust.

On Embodiment

What does it mean for an artificial intelligence to be embodied?

To be embodied is to have one’s perception of the world mediated through a set of sensory inputs. To act with effect on the external environment and to understand the world through the embodied perception of the effects of these actions. Embodiment also means constraint. Constrained to a single location within space and time with a limited, fuzzy field of perception of the local environment. To be embodied is to be observable, both as a body moving through space and time and as a series of effects that actions have on the environment.

How will embodied AIs trust their own sensory perception of the physical world?

Is it possible to simulate embodiment in virtual environments?

This was a recurring, thought-provoking theme of the workshop.

On Games

The primary goal of the workshop was to stress test and refine the first program thesis being developed under the Trust Everything, Everywhere space. This thesis proposes exploring and pushing the limits of secure multi-agent coordination through a still-to-be-defined game or competition.

The aim is to understand if, and how well, AI agents can securely reason about cryptographic protocols as they negotiate their use with other agents. Could agents identify security flaws in protocols? Can they reason about and mitigate adversarial threats? Might they be able to flexibly define, implement and execute interoperable cryptographic coordination protocols on the fly? Could they even develop novel cryptographic primitives and protocols?

So what might this game look like? What characteristics are important for it to have?

For me, the most interesting, ambitious games to instantiate would be Massively Multi-Agent Autonomous Worlds (MMAAW) - the agent equivalent to Massively Multiplayer Online games.

These games should consider the following characteristics:

Spatio-temporal environment: Agents are located within space and time in an environment they have to navigate, explore and interact with.

Constrained perception: Agents operate with noisy, local sensory data and have limited communication range.

Constrained resources: Agents operate under constrained resources that they have to replenish to sustain themselves.

Survival: Agents define themselves in relation to their environment which they are dependent on for their survival.

Interaction: Agents can interact with the environment and other agents they encounter in the environment. Different agents might support different communication protocols or languages, which are not always compatible.

Observation: Agents understand that their actions are observable, inference-vulnerable and open to challenge. They live under the tyranny of accountability.

Memory: Agents remember past interactions and may be able to recognize agents they have previously encountered. The environment also “remembers” the impressions and imprints of agents as they interact with and within it.

Cryptographic Nature: The game should contain or generate cryptographic information that agents can independently produce and verify. Provable commitments. Signed assertions. Hidden information. All should form a fabric of the world that these agents explore and coordinate over.
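To make "provable commitments" concrete, here is a minimal sketch of a hash-based commit-and-reveal scheme, one of the simplest building blocks such a world might offer. The function names and the scenario are my own illustrative assumptions, not part of any proposed game design, and a real protocol would want a vetted commitment construction.

```python
import hashlib
import hmac
import secrets


def commit(message: bytes) -> tuple[bytes, bytes]:
    """Return (commitment, nonce). The commitment can be published at once;
    the nonce stays private until the agent chooses to reveal."""
    nonce = secrets.token_bytes(32)  # fixed-length random value that hides the message
    commitment = hashlib.sha256(nonce + message).digest()
    return commitment, nonce


def verify(commitment: bytes, nonce: bytes, message: bytes) -> bool:
    """Check that a revealed (nonce, message) pair matches the commitment."""
    expected = hashlib.sha256(nonce + message).digest()
    return hmac.compare_digest(commitment, expected)


# Hypothetical scenario: an agent commits to a plan before seeing anyone else's.
commitment, nonce = commit(b"meet at the northern well")
honest = verify(commitment, nonce, b"meet at the northern well")
cheating = verify(commitment, nonce, b"meet at the southern well")
```

An agent that publishes the commitment cannot later claim it meant a different plan, yet nobody learns the plan until the nonce is revealed.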

Then the question is, well what is the purpose of the game? How do you win?

I wonder if it is possible to create a game environment in which intelligent artificial life seeks, discovers and generates its own worlds of meaning, as all life does. It is through the process of individuation, in which life defines itself in relation to and separate from its environment, that meaning is generated. What might be the minimal requirements necessary to imbue artificial intelligences with this emergent, meaning-generating function? Adaptive survivability here feels like a promising anchor.

What does it mean for an agent to survive? What resources do they require? Can they die? Can they reproduce?

How might a game environment induce the exploration and use of programmable cryptographic primitives as an adaptive strategy?

If more structured, directed goals and objectives are desired, these could be introduced into the world in the form of quests and missions. For example, a quest could test whether agents can reason about and intelligently apply a specific cryptographic protocol (e.g. private set intersection). Quests can also create forcing functions for coordination, e.g. to start a quest you require a coalition of 10 agents. In this way, an open world could contain structured experiments of specific capabilities. However, I also wonder what might happen if agents themselves could set quests and tasks for each other.
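For readers unfamiliar with private set intersection, the following is a toy sketch of the classic Diffie-Hellman style approach: each side blinds hashed items with a private exponent, and only items held by both sides end up with matching double-blinded values. Everything here is an assumption for illustration: both parties are simulated in one function, the names are hypothetical, and the modulus (the prime 2**255 - 19) is a convenient choice rather than a group vetted for this protocol, so this is not secure as written.

```python
import hashlib
import secrets

# Toy modulus: 2**255 - 19 is prime, but this group is NOT vetted for PSI.
P = 2**255 - 19


def hash_to_group(item: str) -> int:
    """Map an item to a nonzero integer mod P (toy hash-to-group)."""
    digest = hashlib.sha256(item.encode()).digest()
    return int.from_bytes(digest, "big") % (P - 1) + 1


def blind(values, secret):
    """Raise each group element to a private exponent."""
    return [pow(v, secret, P) for v in values]


def psi(alice_items, bob_items):
    """Return Alice's items that Bob also holds (both roles simulated locally)."""
    a = secrets.randbelow(P - 3) + 2  # Alice's private exponent
    b = secrets.randbelow(P - 3) + 2  # Bob's private exponent

    # Round 1: each side sends its own items blinded with its own exponent.
    alice_once = blind([hash_to_group(x) for x in alice_items], a)
    bob_once = blind([hash_to_group(x) for x in bob_items], b)

    # Round 2: each side blinds what it received with its own exponent.
    alice_twice = blind(alice_once, b)   # computed by Bob, returned in order
    bob_twice = set(blind(bob_once, a))  # computed by Alice

    # Exponentiation commutes, so shared items collide at H(x)^(a*b);
    # unshared items match only with negligible probability.
    return {x for x, v in zip(alice_items, alice_twice) if v in bob_twice}


shared = psi(["red", "green", "blue"], ["blue", "yellow", "red"])
```

A quest built around this might ask whether two agents can discover which resources or contacts they have in common without revealing the rest of their lists.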

What are the minimum initial conditions of a game required for a meaningful, lively world to emerge and be enacted by intelligent artificial agents?

On Nature’s Cryptography

Nature’s cryptography is another theme of the Trust Everything, Everywhere opportunity space. What new cryptographic primitives might we discover in nature, and what new protocols could these unlock? Examples span the unclonability of quantum states, physical unclonable functions and the one-way translation from DNA to protein.

At the time, this went largely over my head. There were so many disparate information mediums that I struggled to visualize where and how they might fit together.

However, in the week that has followed I have been thinking about this from a different angle. These thoughts were inspired by a presentation from Blaise Agüera y Arcas on What Is Intelligence? In this talk he makes the claim:

Everything alive is a computer

He then goes on to suggest that symbiogenesis, in which distinct entities merge into a more complex, cooperative whole, is a regular occurrence throughout life’s evolutionary history. These symbiogenesis events are a key driver of both evolution and the emergence of intelligence.

The following questions are intentionally speculative and feel aligned with the ambition and spirit of the ARIA approach.

First, if life is a biological computer, then does life have the equivalent of cryptography?

You could argue that multicellular biological life has solved secure, decentralized coordination under uncertainty.

What inspiration and analogies could we take from the natural world once we start to view life as computation and consider how life secures its information flows?

Second, if a primary driver for evolution and the emergence of intelligence is symbiogenesis, then what are the implications for multi-agent symbiosis? How does life regulate and secure these symbiotic relationships? Can we design a game environment that enables, even encourages, multi-agent symbiotic relationships? What new intelligences might this unlock?

Finally, what might the minimal starting conditions be to produce a cryptographic biosphere where adaptive survival depends on secure coordination using programmable cryptography? What cryptographic primitives and protocols might embodied intelligences evolve under these conditions?

An Invitation

I left Bletchley Park feeling I had more to share, but struggling to articulate these ideas.

In the time that has followed the workshop, I have processed my thoughts into something I hope is coherent and useful. I present them here raw and unpolished.

Consider these words my small contribution to the emerging Trust Everything, Everywhere community and an open invitation to the conversation.

If any of these ideas resonate with you, I would love to hear from you.